projects

Diffusion Based Planning

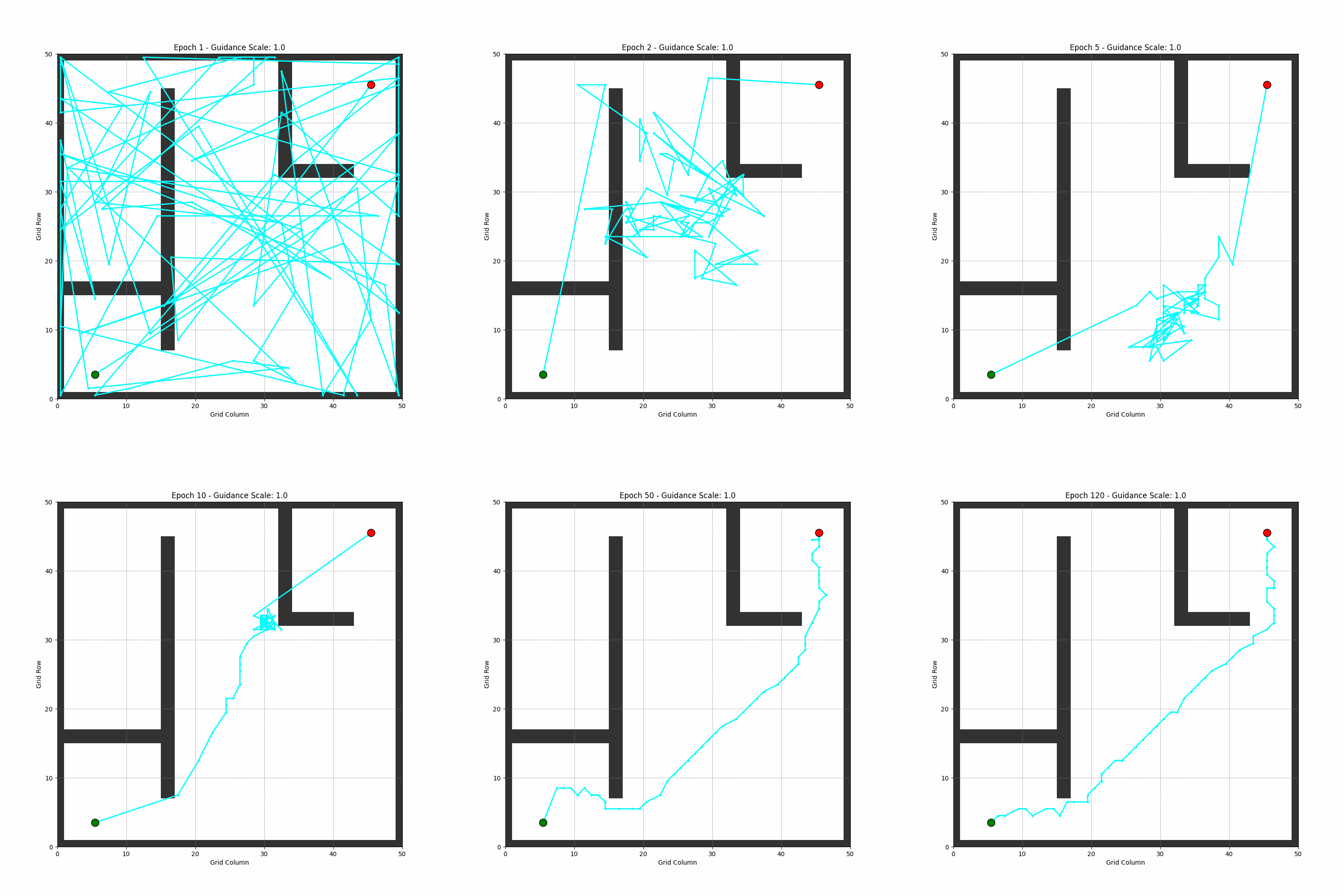

This project implements a Denoising Diffusion Probabilistic Model (DDPM) to learn a prior distribution over smooth, collision-free robot trajectories from expert demonstrations. The system consists of three main components: expert trajectory generation, model training, and inference.

1. Expert Trajectory Generation

We create a dataset of expert trajectories by running the A* search algorithm on a grid-based map with obstacles. These trajectories serve as the ground truth for training the diffusion model.

Key features:

- Creates a binary obstacle map with walls and barriers

- Generates start and goal points in free spaces

- Uses A* search to find optimal paths

- Normalizes and processes trajectories to a consistent format

- Saves the dataset with trajectories and their conditions (start, goal, obstacle map)
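The path-finding step above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: the function name `astar`, the 4-connected neighborhood, the Manhattan heuristic, and the toy map are all assumptions made for the example.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a binary grid (1 = obstacle), or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue  # outside the map or inside an obstacle
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None

# Toy 4x5 map (hypothetical): a wall with a single gap on the right.
grid = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
path = astar(grid, start=(0, 0), goal=(3, 0))
```

Each returned path is a list of `(row, col)` cells. Resampling it to a fixed number of waypoints and normalizing the coordinates would be a separate step before saving.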

2. Model Training

We implement a diffusion model training pipeline.

Key components:

- Loads the expert trajectories and their conditions

- Implements a conditional UNet that takes the noisy trajectory, a timestep embedding, and the environmental conditions as input

- Trains the model to remove Gaussian noise added to expert trajectories, so that sampling can turn pure noise into a trajectory

- Uses classifier-free guidance (CFG) for improved conditioning

- Saves model checkpoints during training
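To make the training setup more concrete, here is a small sketch of how one training example could be built, assuming the standard DDPM noise-prediction objective. The linear schedule (1e-4 to 0.02 over 1000 steps), the condition-dropout probability `p_uncond=0.1`, and all function names are assumptions for illustration. Plain Python lists stand in for tensors to keep the sketch dependency-free.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    """Cumulative products abar_t = prod_{s<=t} (1 - beta_s)."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out

def make_training_example(x0, cond, abar, rng, p_uncond=0.1):
    """Build one (noisy input, timestep, condition, target noise) tuple.

    x0   : clean expert trajectory as a flat list of coordinates
    cond : conditioning info (e.g. start and goal); replaced by None with
           probability p_uncond so the model also learns an unconditional
           prediction, which classifier-free guidance needs at inference
    """
    t = rng.randrange(len(abar))
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal, noise = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    # Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps
    x_t = [signal * x + noise * e for x, e in zip(x0, eps)]
    if rng.random() < p_uncond:
        cond = None
    return x_t, t, cond, eps

rng = random.Random(0)
abar = alpha_bars(linear_betas(1000))
x0 = [0.0, 0.0, 0.5, 0.5, 1.0, 1.0]  # three (x, y) waypoints, flattened
x_t, t, cond, eps = make_training_example(
    x0, {"start": (0.0, 0.0), "goal": (1.0, 1.0)}, abar, rng
)
```

In a full pipeline the network would receive `x_t`, `t`, and `cond`, and would be trained to minimize the mean squared error between its output and `eps`.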

3. Inference

Key features:

- Loads trained model checkpoints

- Implements the reverse diffusion process with classifier-free guidance

- Generates smooth, collision-free trajectories between any valid start and goal

- Visualizes the results with the obstacle map
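The reverse process with classifier-free guidance can be sketched as below. This is an illustrative outline, not the project's implementation: the guidance formula shown is one common convention, the variance choice `sigma_t^2 = beta_t` is one of the standard DDPM options, and `toy_denoiser` is a hypothetical stand-in for the trained UNet (an oracle that already knows a single target trajectory).

```python
import math
import random

def guided_eps(eps_cond, eps_uncond, guidance_scale):
    """Blend conditional and unconditional noise predictions.

    guidance_scale = 0 gives the unconditional prediction, 1 the conditional
    one, and values above 1 extrapolate further toward the condition.
    """
    return [u + guidance_scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]

def ddpm_step(x_t, eps_hat, t, betas, abar, rng):
    """One ancestral sampling step x_t -> x_{t-1} given predicted noise."""
    beta_t = betas[t]
    coef = beta_t / math.sqrt(1.0 - abar[t])
    scale = 1.0 / math.sqrt(1.0 - beta_t)
    mean = [scale * (x - coef * e) for x, e in zip(x_t, eps_hat)]
    if t == 0:
        return mean  # no noise is added on the final step
    sigma = math.sqrt(beta_t)
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]

def sample(denoiser, cond, dim, betas, abar, rng, guidance_scale=2.0):
    """Start from Gaussian noise and denoise step by step."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(len(betas))):
        eps_c = denoiser(x, t, cond)   # prediction with the condition
        eps_u = denoiser(x, t, None)   # prediction with the condition dropped
        x = ddpm_step(x, guided_eps(eps_c, eps_u, guidance_scale), t, betas, abar, rng)
    return x

# Assumed linear noise schedule over 1000 steps.
betas = [1e-4 + i * (0.02 - 1e-4) / 999 for i in range(1000)]
abar, running = [], 1.0
for b in betas:
    running *= 1.0 - b
    abar.append(running)

def toy_denoiser(x_t, t, cond):
    """Hypothetical oracle: here cond is simply the target trajectory."""
    target = cond if cond is not None else [0.0] * len(x_t)
    s, n = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [(x - s * x0) / n for x, x0 in zip(x_t, target)]

straight_line = [0.0, 0.0, 0.5, 0.5, 1.0, 1.0]  # three (x, y) waypoints
traj = sample(toy_denoiser, straight_line, 6, betas, abar,
              random.Random(0), guidance_scale=1.0)
```

With the real model, `cond` would carry the start, goal, and obstacle map, and the guidance scale would trade off adherence to those conditions against sample diversity.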

Denoising Process Visualization

The diffusion model works by gradually denoising a sample of random Gaussian noise into a coherent trajectory. The following visualization shows this process:

Research Posters

Poster 1: The proposed "Stein Coverage" algorithm improves multisensor deployment by optimally matching sensor locations to event distributions using Stein Variational Gradient Descent (SVGD). It introduces a repulsive term to reduce coverage overlap and interference, creates a utility map highlighting critical regions, and solves the deployment as a linear assignment problem using the Hungarian algorithm.
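For readers unfamiliar with SVGD, the sketch below shows one textbook SVGD update for 1-D particles with an RBF kernel. It illustrates the general technique only and is not the Stein Coverage algorithm from the poster: the function name `svgd_step`, the kernel bandwidth, the step size, and the standard normal target density are assumptions.

```python
import math

def svgd_step(particles, grad_log_p, step_size=0.1, bandwidth=1.0):
    """One SVGD update for 1-D particles with kernel k(a, b) = exp(-(a-b)^2 / h)."""
    n = len(particles)
    updated = []
    for xi in particles:
        phi = 0.0
        for xj in particles:
            k = math.exp(-((xj - xi) ** 2) / bandwidth)
            attract = k * grad_log_p(xj)             # pulls toward high density
            repel = 2.0 * (xi - xj) / bandwidth * k  # gradient of k w.r.t. xj
            phi += attract + repel
        updated.append(xi + step_size * phi / n)
    return updated

# Assumed target: a standard normal density, so grad log p(x) = -x.
particles = [2.0, 2.1, 2.2]
for _ in range(500):
    particles = svgd_step(particles, lambda x: -x)
```

The `attract` term moves particles toward regions of high density, while the `repel` term pushes nearby particles apart, so a cluster that starts tightly packed spreads out over the target.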

Robot Teleoperation

A robot teleoperation system that recognizes hand gestures from ultra-wideband sensor data using deep neural networks.

Video Demos

Robot Follower using YOLO

We used You Only Look Once (YOLO), a real-time object detector, to solve a tracking problem. The robot detects a person and follows them using a simple controller.
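As an illustration of what such a controller could look like, the sketch below maps a detected bounding box to velocity commands with two proportional terms. The original controller is not described, so everything here is an assumption: the function name `follow_command`, the gains, the use of box height as a distance proxy, and the sign convention.

```python
def follow_command(bbox, image_width, target_height, k_turn=1.0, k_forward=1.0):
    """Map a person bounding box to (linear, angular) velocity commands.

    bbox is (x_min, y_min, x_max, y_max) in pixels.
    """
    x_min, y_min, x_max, y_max = bbox
    center_x = 0.5 * (x_min + x_max)
    # Horizontal offset of the box from the image center, normalized to [-1, 1].
    offset = (center_x - image_width / 2) / (image_width / 2)
    angular = -k_turn * offset  # assumed convention: positive = turn left
    # Box height as a rough distance proxy: a smaller box means the person is
    # farther away, so the robot drives forward; a larger box makes it back off.
    height_error = (target_height - (y_max - y_min)) / target_height
    linear = k_forward * height_error
    return linear, angular

# Person centered and at the desired apparent size: the robot holds still.
centered = follow_command((270, 100, 370, 340), image_width=640, target_height=240)
# Person small and to the right: drive forward and turn right.
far_right = follow_command((500, 180, 560, 300), image_width=640, target_height=240)
```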

Robot Teleoperation using Hand Gestures

We propose classifying simple human hand gestures (Go, Right, Left) from ultra-wideband (UWB) sensor data. This project applies machine learning techniques with robotic applications in mind.

YouTube Demo

YouTube demonstration of our research results.